Security of IT Systems in Public Clouds

When talking to people responsible for IT in organizations, I often encounter security-related fears about moving to the cloud. How can we be sure that by implementing cloud solutions, or migrating existing ones to the cloud, we will not expose our systems to new threats and increase the risk of unauthorized persons gaining access to our company secrets? I will try to answer these questions below.

Is the public cloud public?

In organizations just beginning their adventure with cloud solutions, the very word “public” evokes associations with public access to data. However, “public cloud” does not refer to data confidentiality but to the availability of cloud services. As long as we do not deliberately share data or systems located in the cloud, no one else will have access to them. Unlike private clouds, which are built entirely for the use of one company or organization, public clouds can be used by anyone, from individual users through small and medium-sized enterprises to large corporations and government agencies. The same cloud services, with the same capabilities, parameters and configuration options, are generally available to all of these entities.

Among other things, this widespread availability makes security a top priority for leading cloud providers. This is not only because they care about their reputation, which is crucial for winning a share of the rapidly growing market for cloud services, but also because serious data security breaches affecting large cloud customers can translate into heavy financial losses and legal consequences. Since cloud providers operate on a self-service model and continuously develop a single platform available to everyone, high security standards benefit all users.

Who is responsible for the security of the cloud?

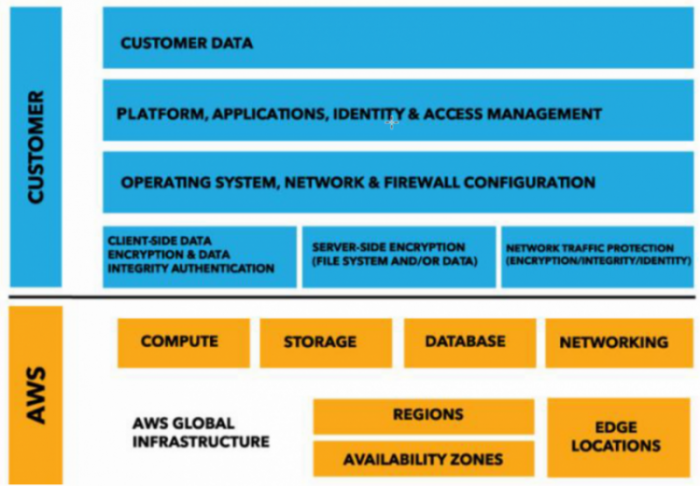

It’s important to understand how responsibility for data security is divided. The cloud provider is responsible for security “of” the cloud: the security of physical locations, redundancy, control of access to servers, the secure operation of the services themselves (e.g., updating the database servers that underpin managed database services), and the isolation of customers from one another at the virtualization layer. The user is responsible for security “in” the cloud. This means that data security also depends on how users configure cloud services: using the tools, knowledge and best practices available from the provider and its partners, they decide what level of security is appropriate for their business. Secure default settings that reduce the risk of configuration errors, such as a “deny all by default” policy, also help a great deal.
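To make “deny all by default” concrete, here is a minimal Python sketch of the evaluation order AWS documents for IAM policies: a request is implicitly denied unless some statement explicitly allows it, and an explicit deny always overrides an allow. The `Statement` class, the shell-style wildcard matching and the example bucket ARNs are hypothetical simplifications; real IAM evaluation also involves conditions, resource-based policies, permission boundaries and organization-level policies.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Statement:
    """Hypothetical, simplified policy statement (no conditions or principals)."""
    effect: str                 # "Allow" or "Deny"
    actions: tuple[str, ...]    # e.g. ("s3:GetObject",) or ("s3:*",)
    resources: tuple[str, ...]  # e.g. ("arn:aws:s3:::reports*",)

    def matches(self, action: str, resource: str) -> bool:
        # IAM wildcards are approximated here with shell-style matching.
        return any(fnmatchcase(action, a) for a in self.actions) and any(
            fnmatchcase(resource, r) for r in self.resources
        )


def evaluate(statements: list[Statement], action: str, resource: str) -> str:
    """Return "Allow", "ExplicitDeny" or "ImplicitDeny" for a single request."""
    matching = [s for s in statements if s.matches(action, resource)]
    # 1. An explicit deny overrides everything else.
    if any(s.effect == "Deny" for s in matching):
        return "ExplicitDeny"
    # 2. Otherwise, an explicit allow grants access.
    if any(s.effect == "Allow" for s in matching):
        return "Allow"
    # 3. No matching statement: the request is denied by default.
    return "ImplicitDeny"


policy = [
    Statement("Allow", ("s3:GetObject", "s3:ListBucket"), ("arn:aws:s3:::reports*",)),
    Statement("Deny", ("s3:*",), ("arn:aws:s3:::reports/confidential/*",)),
]

print(evaluate(policy, "s3:GetObject", "arn:aws:s3:::reports/2024/q1.csv"))        # Allow
print(evaluate(policy, "s3:GetObject", "arn:aws:s3:::reports/confidential/x.csv"))  # ExplicitDeny
print(evaluate(policy, "s3:DeleteObject", "arn:aws:s3:::reports/2024/q1.csv"))     # ImplicitDeny
```

The point of the design is that forgetting a rule fails safe: a missing statement leads to a denied request rather than an exposed resource.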

Amazon Web Services, a pioneer and leader in Infrastructure as a Service and in the development of cloud-native solutions, applies exactly this shared responsibility model.

As a result, the security of a system in the cloud depends on two key factors:

- Maturity of the cloud – the quality of its security mechanisms, the available configuration options, and the range, sophistication and flexibility of its tools

- Knowledge of these tools and experience in building secure IT systems

Both are equally important and need to be addressed when deciding to adopt cloud solutions.

How to compare data security in the cloud and on-premises?

When gathering requirements for a new e-commerce system, I often hear: “the system should run in our server room, because it will be safer this way”. So I always ask: “What facts is this belief based on?” I suggest you examine this yourself and compare what Amazon Web Services offers with the capabilities of your own organization. Let’s divide the topic of security into two areas:

Physical security

Undoubtedly, the global AWS infrastructure is unique in terms of both its scale and the way its regions are designed. A region typically consists of three so-called Availability Zones, located far enough apart to reduce their vulnerability to the same natural disasters, yet close enough to allow rapid synchronization of large amounts of data between them. Each Availability Zone consists of at least one independent data center. Above all, this architecture makes it possible to achieve very high resilience of data to “force majeure” events. An extreme example is Amazon S3, which has been in operation since 2006 and is designed for data durability of 99.999999999% (“eleven nines”).
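The eleven-nines figure is easier to grasp when expressed as expected losses. The back-of-the-envelope sketch below assumes, as a simplification, that it means an independent annual loss probability of 10^-11 per object. Under that assumption, storing 10,000,000 objects means losing on average one object roughly every 10,000 years, which is the same illustration AWS uses when explaining S3 durability. Exact fractions are used because `1 - 0.99999999999` in floating point would not give exactly 10^-11.

```python
from fractions import Fraction

# S3 design target for annual durability: 99.999999999% ("eleven nines").
DURABILITY = Fraction(99_999_999_999, 100_000_000_000)


def expected_annual_losses(object_count: int, durability: Fraction = DURABILITY) -> Fraction:
    """Expected number of objects lost per year, assuming independent losses."""
    return object_count * (1 - durability)


def years_per_lost_object(object_count: int, durability: Fraction = DURABILITY) -> Fraction:
    """Average number of years between single-object losses."""
    return 1 / expected_annual_losses(object_count, durability)


objects = 10_000_000
print(expected_annual_losses(objects))  # 1/10000
print(years_per_lost_object(objects))   # 10000
```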

While we cannot personally see the server where our data is stored, this does not mean that data placed in the cloud sits in some undefined space where it can be made available to random users in an uncontrolled way. Data will never leave the chosen region without the cloud user’s knowledge, so we always know which country and which region it is located in. Admittedly, I sometimes meet decision-makers for whom widely recognized certifications are not enough to confirm that the cloud meets the highest standards and that there are no surprises lurking beneath the surface. Such people apparently have more confidence in the “local IT specialist” than in the system of standards and audits. Interestingly, this thinking often goes hand in hand with a fear of vendor lock-in with the cloud provider, while staying on-premises makes them dependent on the skills of the specialists responsible for infrastructure and on-premises systems, who are becoming ever more expensive and harder to find on the market. This is a natural fear of something distant and intangible, but is it a legitimate one?

Network security

Looking back at the projects I’ve worked on, I can say that, as a rule, IT departments either do everything possible to keep their network and systems away from the outside world for as long as possible, or, under pressure from new business requirements, expose systems to the world without an adequate level of security, or even without the team feeling comfortable about it. Both approaches are entirely understandable given the up-front cost of deploying a firewall on-premises. Oh, and there’s one more recurring pattern: we deploy an expensive firewall, but from the day it goes live there is not enough time or resources to maintain its level of protection.

What does the cloud offer in this area? Let’s review:

- Console (web application) – the first point of contact with the cloud, accessible only over an encrypted connection and, if we follow the recommendations shown in the console, also protected by multi-factor authentication.

- Simple firewall – by default we get a private network segment that is inaccessible to anyone who has not been explicitly allowed in (e.g., based on IP address and port).

- VPN connection – if we need closer integration between the internal network and the cloud segment (and, e.g., less restrictive filtering policies for users inside the company network).

- Protection against DDoS attacks – all AWS users benefit, at no additional cost, from the basic DDoS protection provided by AWS Shield Standard, which also covers services such as Elastic Load Balancing and Amazon CloudFront. Simply running an application behind a load balancer or serving it through a CDN gives us protection against attacks such as SYN floods. For more demanding users, AWS Shield Advanced provides tools to protect other services.

- Web Application Firewall (AWS WAF) – additional protection of applications against exploits and scanning, with the option of configuring custom rules, at a cost proportional to usage.
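The “simple firewall” in the list above corresponds to AWS security groups, whose inbound behaviour is deny by default: a security group contains only allow rules, and inbound traffic that matches no rule is dropped. The Python sketch below models that inbound check with the standard `ipaddress` module. The `InboundRule` type and the example address ranges (taken from the documentation-only TEST-NET blocks) are hypothetical, and the model ignores non-TCP protocols, outbound rules and the stateful handling of return traffic that real security groups perform.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class InboundRule:
    """Hypothetical allow rule: a source CIDR block and an inclusive TCP port range."""
    cidr: str
    from_port: int
    to_port: int

    def allows(self, source_ip: str, port: int) -> bool:
        in_network = ipaddress.ip_address(source_ip) in ipaddress.ip_network(self.cidr)
        return in_network and self.from_port <= port <= self.to_port


def is_inbound_allowed(rules: list[InboundRule], source_ip: str, port: int) -> bool:
    # Allow-only rules: one match is enough; no match means the traffic is dropped.
    return any(rule.allows(source_ip, port) for rule in rules)


rules = [
    InboundRule("0.0.0.0/0", 443, 443),     # HTTPS open to everyone
    InboundRule("203.0.113.0/24", 22, 22),  # SSH only from an example office range
]

print(is_inbound_allowed(rules, "198.51.100.7", 443))  # True
print(is_inbound_allowed(rules, "198.51.100.7", 22))   # False
print(is_inbound_allowed(rules, "203.0.113.10", 22))   # True
print(is_inbound_allowed([], "203.0.113.10", 443))     # False: an empty group admits nothing
```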

If we add the available tools for detailed auditing of access to our data and applications (AWS CloudTrail), and long-term data storage at a cost we could not achieve with our own resources, it is difficult to avoid the conclusion that AWS offers a full set of tools for securing our systems. The question remains…
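CloudTrail delivers its logs as JSON files with a `Records` array, where each record describes one API call using fields such as `eventTime`, `eventSource`, `eventName`, `userIdentity` and, when the call returned an error, `errorCode`. As a minimal sketch of what such an audit trail makes possible, the Python code below counts failed calls per caller in a single log file that has already been downloaded. The sample records, the account ID and ARNs, and the `failed_calls_by_principal` helper are hypothetical; a real setup would read the files from the S3 bucket CloudTrail delivers them to.

```python
import json
from collections import Counter

# Hypothetical excerpt of a CloudTrail log file (real records contain many more fields).
SAMPLE_LOG = """
{"Records": [
  {"eventTime": "2024-01-15T08:12:03Z", "eventSource": "s3.amazonaws.com",
   "eventName": "GetObject",
   "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::111122223333:user/alice"}},
  {"eventTime": "2024-01-15T08:13:41Z", "eventSource": "s3.amazonaws.com",
   "eventName": "DeleteBucket", "errorCode": "AccessDenied",
   "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::111122223333:user/bob"}},
  {"eventTime": "2024-01-15T08:14:02Z", "eventSource": "iam.amazonaws.com",
   "eventName": "CreateAccessKey", "errorCode": "AccessDenied",
   "userIdentity": {"type": "IAMUser", "arn": "arn:aws:iam::111122223333:user/bob"}}
]}
"""


def failed_calls_by_principal(log_text: str) -> Counter:
    """Count API calls that returned an error, grouped by the caller's ARN."""
    records = json.loads(log_text)["Records"]
    return Counter(
        record.get("userIdentity", {}).get("arn", "<unknown>")
        for record in records
        if "errorCode" in record  # set only for calls that returned an error
    )


for principal, count in failed_calls_by_principal(SAMPLE_LOG).most_common():
    print(principal, count)
# arn:aws:iam::111122223333:user/bob 2
```

Repeated `AccessDenied` errors from one identity are a typical signal worth investigating, whether they come from a misconfigured application or from someone probing permissions.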

…how do you maintain security in the cloud?

At the beginning, it is best to find a partner who will help you carry out your first projects and build your organization’s competence. As a rule, AWS does not carry out projects on its own, but instead supports a network of partners specializing in particular industries, types of solutions or technologies. Some support tools are also available directly to end users, for example:

- Training and certification

- AWS Well-Architected Tool – a tool based on a set of current best practices for building systems on AWS (covering, e.g., security)

- AWS Trusted Advisor – a tool that recommends changes by comparing our cloud configuration with AWS best practices
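Trusted Advisor’s security checks include, for example, flagging security groups that leave specific ports unrestricted and root accounts without MFA. The underlying idea of comparing a configuration against recommendations can be illustrated with a small Python sketch. The configuration dictionary, the set of sensitive ports and the finding messages are our own hypothetical assumptions, not Trusted Advisor’s actual data model or logic.

```python
# Hypothetical, simplified account configuration (not a real AWS API response).
CONFIG = {
    "root_mfa_enabled": False,
    "security_groups": [
        {"id": "sg-web", "rules": [{"cidr": "0.0.0.0/0", "port": 443}]},
        {"id": "sg-admin", "rules": [{"cidr": "0.0.0.0/0", "port": 22},
                                     {"cidr": "10.0.0.0/8", "port": 3389}]},
    ],
}

# Ports treated as sensitive when open to the whole internet (an assumption).
SENSITIVE_PORTS = {22, 3389, 3306, 5432}


def audit(config: dict) -> list[str]:
    """Compare the configuration with a few best-practice rules and list findings."""
    findings = []
    if not config.get("root_mfa_enabled", False):
        findings.append("Root account has no MFA enabled")
    for group in config.get("security_groups", []):
        for rule in group["rules"]:
            if rule["cidr"] == "0.0.0.0/0" and rule["port"] in SENSITIVE_PORTS:
                findings.append(f"{group['id']}: port {rule['port']} open to 0.0.0.0/0")
    return findings


for finding in audit(CONFIG):
    print(finding)
# Root account has no MFA enabled
# sg-admin: port 22 open to 0.0.0.0/0
```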

Summary

I am personally convinced that concerns about the security of data or systems in the cloud stem mainly from low awareness. Taking an “engineering” approach to the topic: there is no reason to believe that the cloud is by definition more or less secure, but it clearly makes it easier to access the tools and practices needed to build systems that are no less secure than on-premises solutions. A good cloud provides highly standardized services built on the provider’s many years of experience, with each service developed by a team of highly specialized experts. This level of competence and operational excellence is beyond the reach of the vast majority of organizations.